We are in the midst of a digital revolution that is affecting all areas of life. A significant driver of this revolution is artificial intelligence (AI).

One of the big growth areas for AI is talent management.

The advantages that AI systems offer HR departments are obvious: artificial intelligence plays to its strengths wherever processes can be automated. According to experts, for example, AI can reduce the time spent on resume screening by up to 90%.

Legislators classify areas such as talent management as high-risk, meaning that the use of artificial intelligence can harm people here, whether in terms of their health, their careers or otherwise. To minimize that risk, AI used in high-risk areas must meet not only technical but also ethical requirements.

The use of AI in talent management therefore requires nothing less than a sharp definition of ethical corporate values, something that is still far from a given at many companies.

Today, corporate ethical guidelines are primarily a statement of intent. Sometimes they are not even that, but merely a marketing tool for conveying a certain external image. Artificial intelligence cannot work with declarations of intent, however; it needs measurable ethical standards, including for fairness. But what is fair? Ask three people this question and you will get three completely different answers, to say nothing of a company with several thousand employees.
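To make the point about measurability concrete, here is a minimal Python sketch of how two common fairness definitions can disagree about the same screening result. Everything in it is an illustrative assumption rather than material from our study: the `Candidate` fields, the groups "A" and "B", and the candidate counts are invented. "Demographic parity" compares how often each group is selected overall; "equal opportunity" compares how often each group's qualified candidates are selected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    group: str       # hypothetical demographic group label
    qualified: bool  # assumed ground truth: is the candidate suitable?
    selected: bool   # decision made by the (hypothetical) screening system


def build(group, qual_sel, qual_rej, unqual_sel, unqual_rej):
    """Create invented toy candidates for one group from four counts."""
    return (
        [Candidate(group, True, True)] * qual_sel
        + [Candidate(group, True, False)] * qual_rej
        + [Candidate(group, False, True)] * unqual_sel
        + [Candidate(group, False, False)] * unqual_rej
    )


def selection_rate(candidates, group):
    """Share of all candidates in a group who were selected."""
    members = [c for c in candidates if c.group == group]
    return sum(c.selected for c in members) / len(members)


def qualified_selection_rate(candidates, group):
    """Share of the qualified candidates in a group who were selected."""
    members = [c for c in candidates if c.group == group and c.qualified]
    return sum(c.selected for c in members) / len(members)


# Invented data: ten candidates per group, four selected in each.
candidates = build("A", 4, 2, 0, 4) + build("B", 2, 0, 2, 6)

# Demographic parity: difference in overall selection rates.
parity_gap = abs(selection_rate(candidates, "A") - selection_rate(candidates, "B"))

# Equal opportunity: difference in selection rates among qualified candidates.
opportunity_gap = abs(
    qualified_selection_rate(candidates, "A")
    - qualified_selection_rate(candidates, "B")
)

print(f"Demographic parity gap: {parity_gap:.2f}")
print(f"Equal opportunity gap:  {opportunity_gap:.2f}")
```

On this toy data the first gap is zero while the second is not: the same decisions look fair under one definition and unfair under the other. Which definition applies is exactly the kind of choice a company has to make explicitly before an AI system can be held to it.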

We have spent the last eight months researching the topic, interviewing experts around the world, and developing a practical approach to AI ethics. The study is expected to be published at the end of April. To be notified of its release, subscribe to our newsletter here.

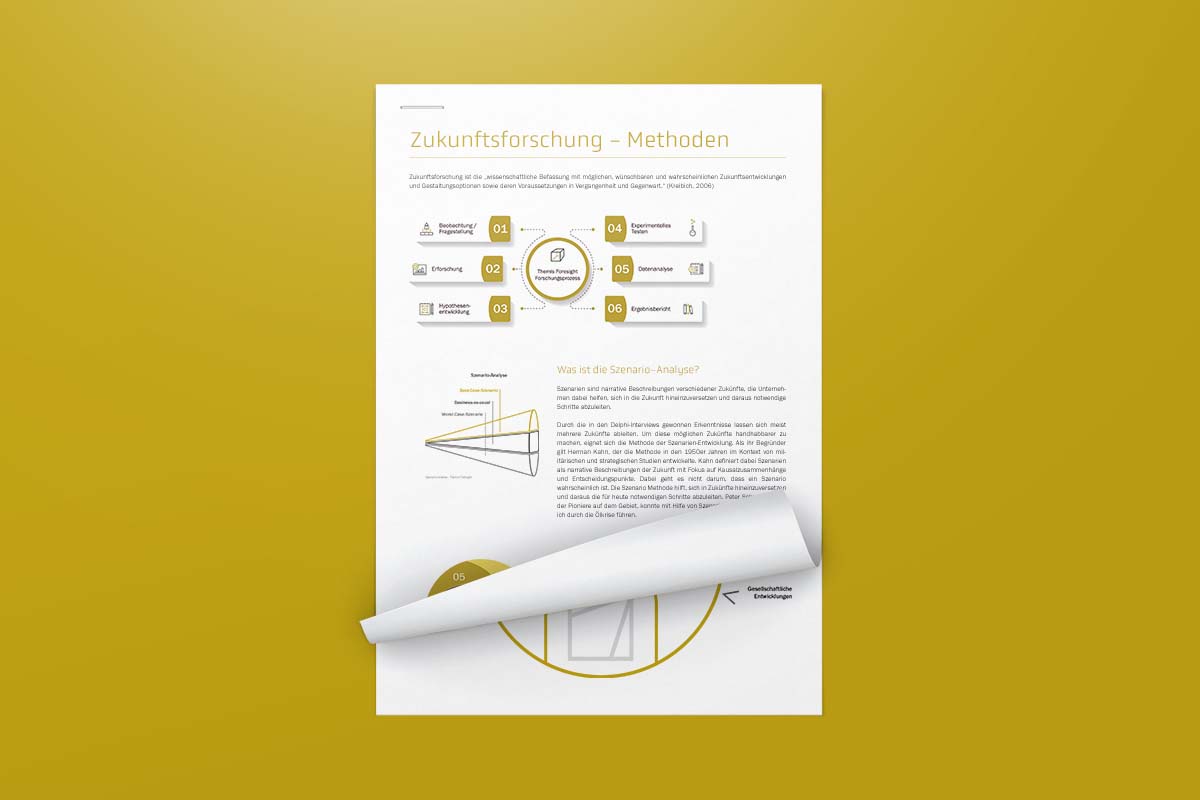

To put the finishing touches on the study, Jan Berger and I met with Konstantin Katsikis of the KWADRAT advertising agency in Berlin last week and worked out a design concept for it.

Together, we are also developing infographics to make complex technical topics clear for CHROs and business leaders.